Job Scheduler

The Job Scheduler enables you to schedule, monitor and control the running of the Apps, Excel Addins, Services, Batch Jobs and Jupyter Notebooks that have been created in Datatailr. For example, you can see the result of a batch run, get debug information, terminate running jobs and so on.

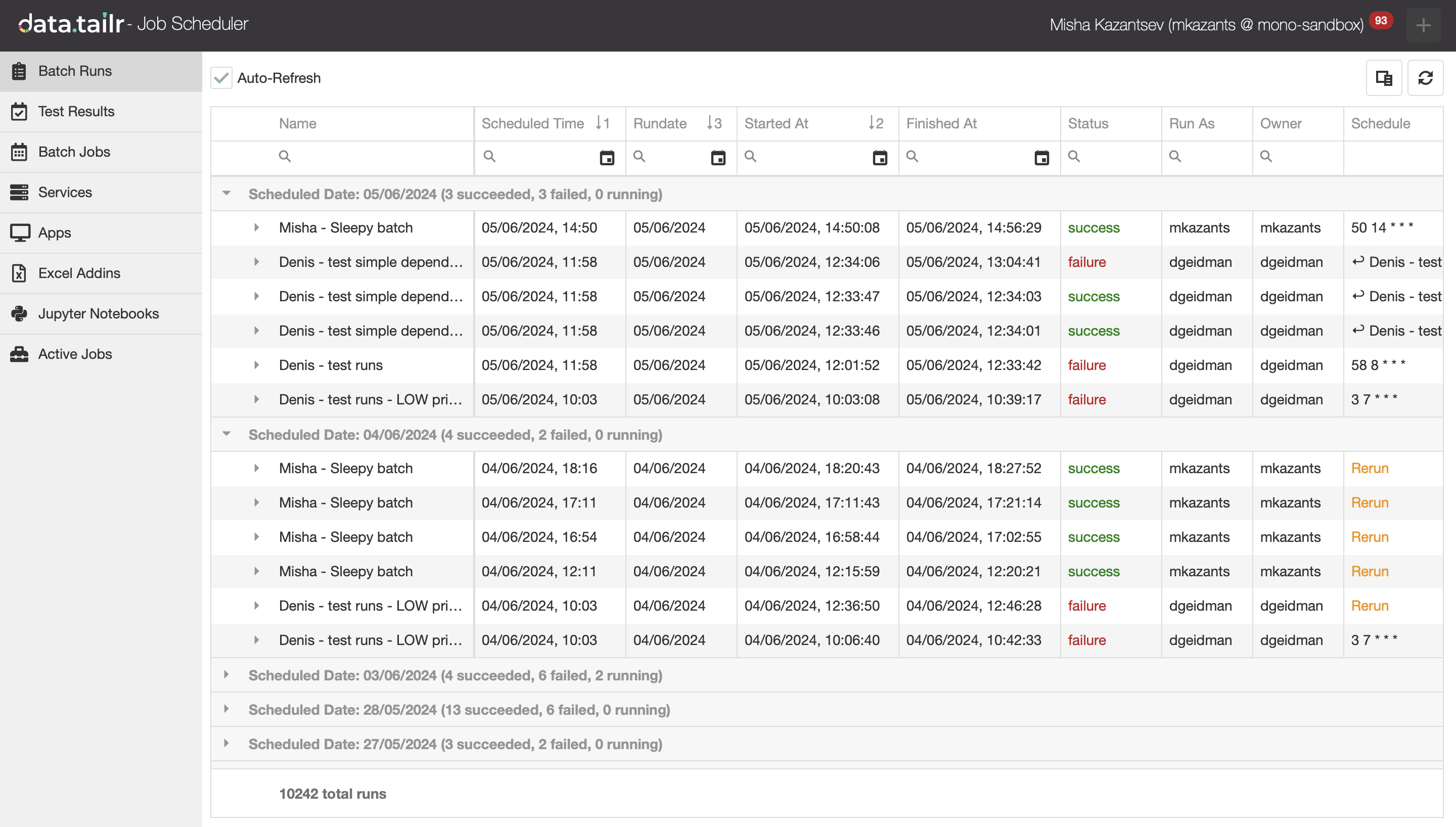

Click the Job Scheduler icon. The following is displayed –

Let's cover the sections that are provided in the left pane.

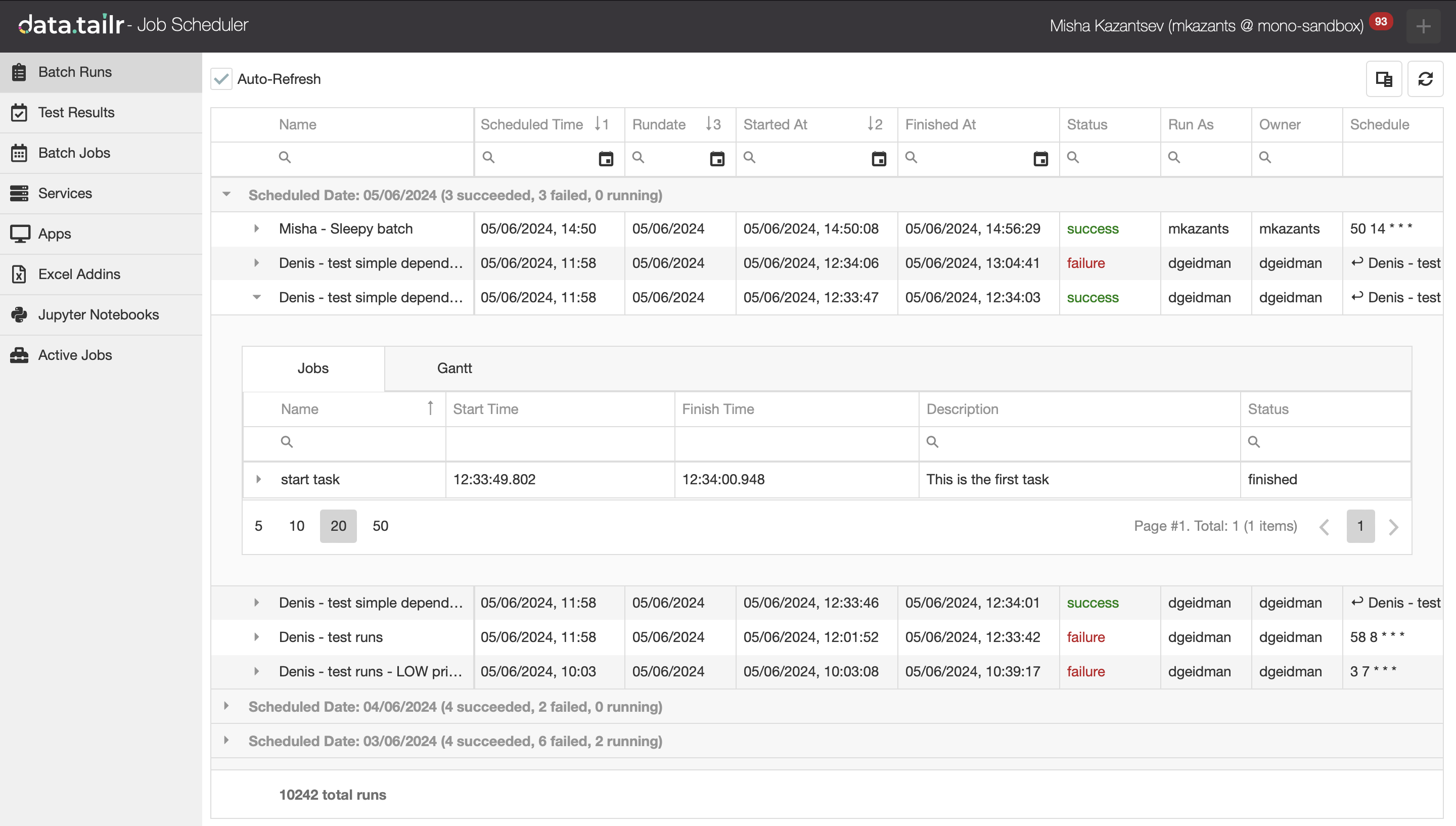

Batch Runs

This section lists all the Batch Job runs defined in Datatailr and enables you to display a wide variety of details about each Batch Run and each job run in every batch, as well as the job logs, error messages and configuration.

List of all Batch Runs

The top of the Batch Run list shows a row for each batch that was run on the current day. This is followed by a row for each previous day (Runs on: dd/mm/yyyy) that can be expanded to show all the Batch Runs on that day.

The following columns of information are included for each Batch Run –

-

Scheduled Time – Specifies when this batch was supposed to start running.

-

Runtime – Specifies when this batch actually started running.

-

Finished at – Specifies when the batch is completed.

-

Success – Indicates whether all the jobs in the Batch Run were completed successfully. The batch fails if even a single job in the batch fails. Possible statuses here are: running, success and failure.

-

Run as – Specifies the user under which the jobs in the Batch Run were executed. This determines the permissions awarded to the jobs, such as retrieving encryption keys from a database.

-

Owner – Specifies the owner of the Batch Job that was run.

-

Group – Specifies the user group of the Batch Job that was run.

-

Tag – Specifies the Datatailr environment in which this Batch Job was run – Dev, Pre or Prod.

-

Schedule – Specifies the schedule that this Batch Job was defined to run with.

-

#Jobs – Specifies the quantity of jobs in this batch run.

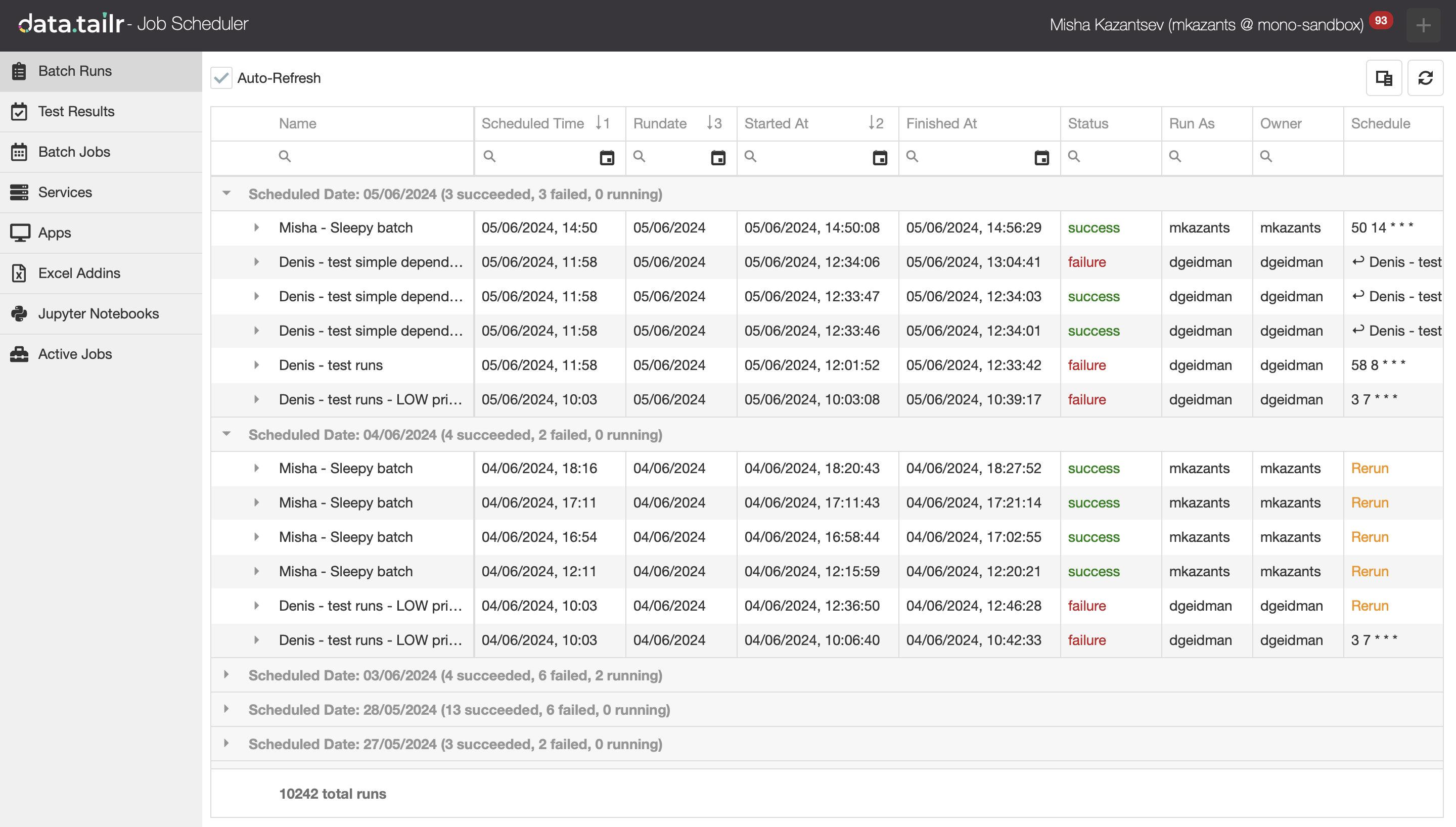

Batch Runs Right-click Menu

The following right-click menu options are provided –

-

Rerun – If a Batch Job failed, then this option can be used to rerun the parts of the batch that failed. This option is not available if the Batch Job completed successfully.

-

Rerun (Clean) – Enables you to rerun the entire batch from scratch.

-

Rerun (Clean with selected images) – Enables you to rerun the entire batch from scratch with a specified image version.

-

Logs – Enables you to view the stdout log file (which is the standard output file that stores the output stream of process messages for subsequent analysis) and the stderr log file (which is the error file that stores the output stream of error messages).

-

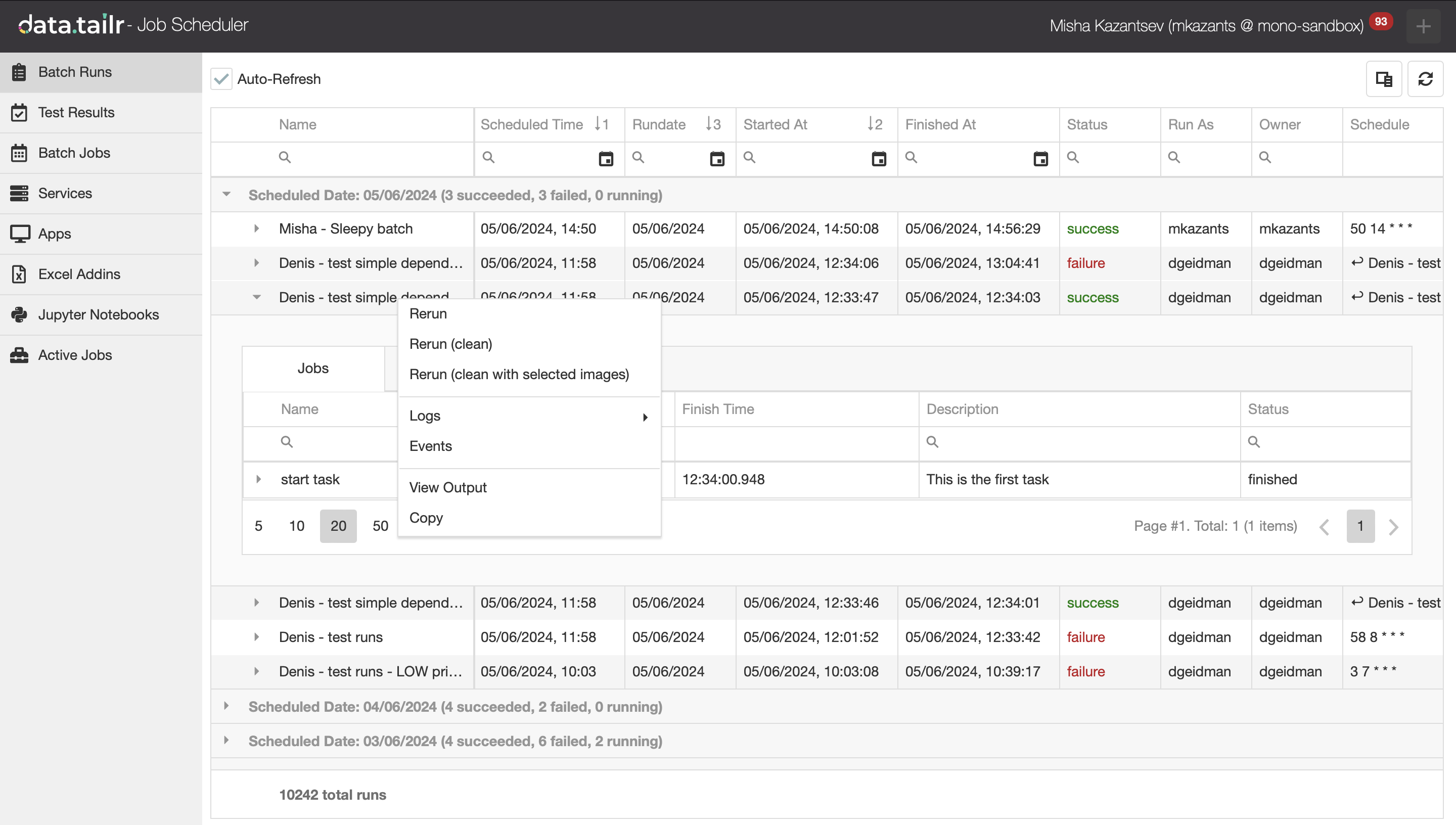

Events – Displays the scheduling events of a batch. The following is displayed in which you can select one of the jobs in the batch and see the events of its run –

-

View output – Displays the Python or JSON object saved by this app to S3. If a large file was saved to S3, it may take a few moments before it is retrieved and displayed.

List of Jobs in a Batch Run

To see the list of jobs that ran within a specific Batch Run –

In the list of Batch Runs, you can click on the left of a Batch Run’s row to expand it and display two tabs of information – Jobs and Gantt, as described below –

-

Jobs – Shows a row for each job run in the batch, as shown below –

The following columns of information are included for each job in the batch –

- Name/Description – Specifies the name and a description of what the job does.

- Start Time/Finish Time – Specifies the time when this job started and finished running.

- Status – Specifies whether the job finished successfully, failed or is still running.

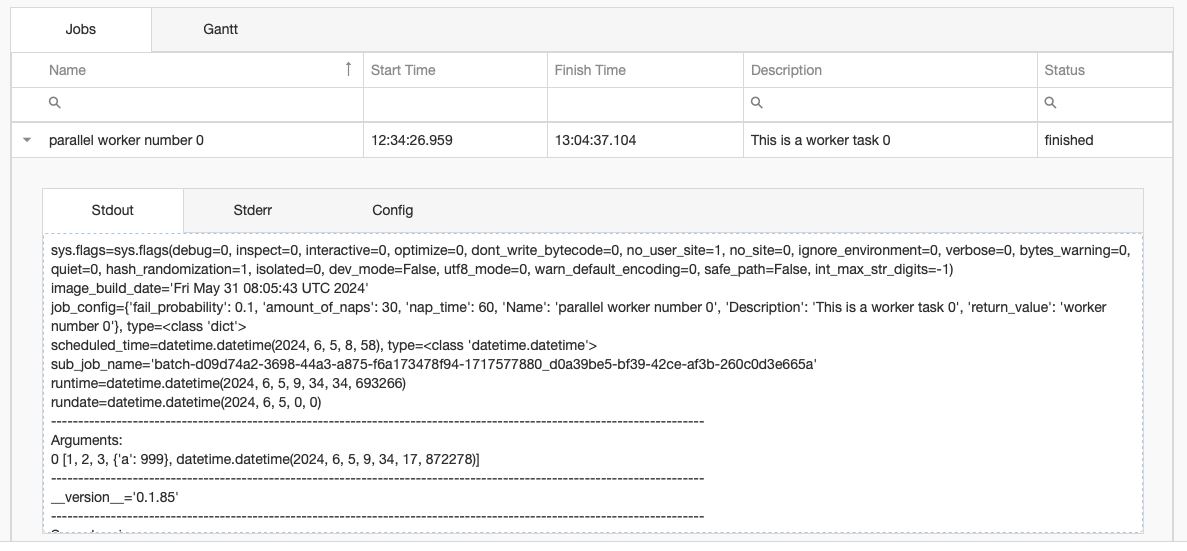

Additionally, any individual job in a Batch Run can be expanded to see its details – click on the left of each job’s row to display three tabs describing it – Stdout, Stderr and Config, as described below –

-

Stdout and Stderr – Shows the standard output (stdout) and the standard error (stderr) of the job –

-

Config Tab – Displays the JSON of the configuration file used during this Batch Run. To change the configuration of a Batch Run, see Editing a Batch Job in Batch Jobs. You can then reschedule the job and run it with this new configuration.

-

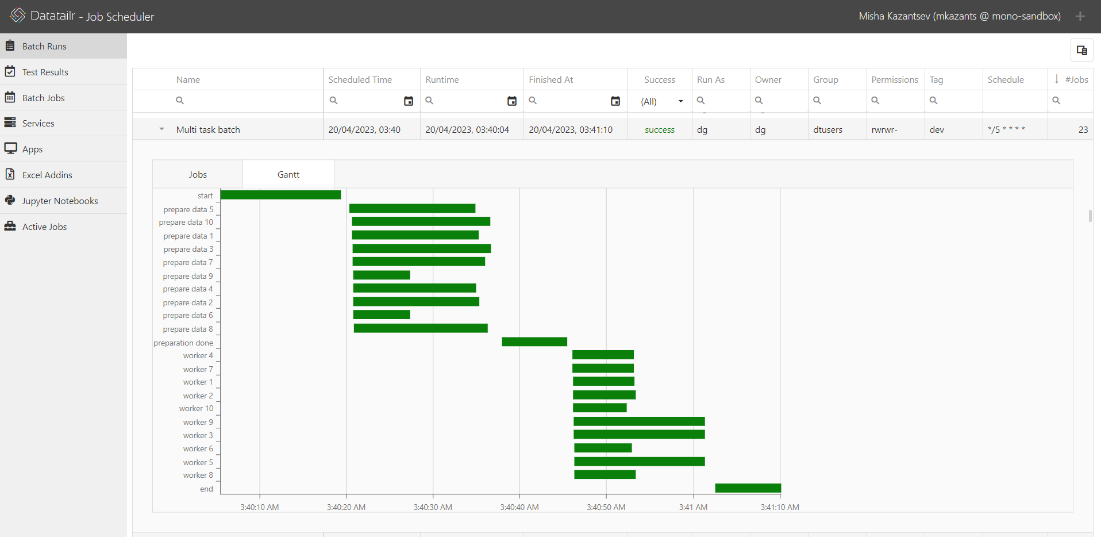

Gantt Tab – Displays a Gantt chart that visually represents the start and end times of each job in the Batch Run, along with their respective statuses. Gantt charts are updated close to real-time – green bars indicate completed jobs, yellow bars indicate running jobs, while red bars indicate failed ones.

The duration of each bar in the chart offers a quick overview of the job’s running time. Additionally, the positioning and overlapping of the bars serves as a visual indicator of the jobs’ dependencies.

This visualization enables you to verify that dependent jobs only commence after the completion of their preceding jobs. If they don’t, then it's a clue to fix dependencies in the Batch Job and reschedule it to run again.
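The check that the Gantt chart lets you perform by eye can also be sketched in Python. This is only an illustration: the job names, timestamps and the `runs` and `depends_on` structures are hypothetical and do not come from a Datatailr API.

```python
from datetime import datetime

# Hypothetical job records: name -> (start time, finish time).
runs = {
    "extract": (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 5)),
    "transform": (datetime(2024, 1, 1, 9, 6), datetime(2024, 1, 1, 9, 10)),
    "report": (datetime(2024, 1, 1, 9, 11), datetime(2024, 1, 1, 9, 12)),
}

# Hypothetical dependency map: job -> jobs that must finish first.
depends_on = {"transform": ["extract"], "report": ["transform"]}


def find_violations(runs, depends_on):
    """Return (job, upstream) pairs where job started before upstream finished."""
    violations = []
    for job, upstreams in depends_on.items():
        job_start = runs[job][0]
        for upstream in upstreams:
            upstream_finish = runs[upstream][1]
            if job_start < upstream_finish:
                violations.append((job, upstream))
    return violations


print(find_violations(runs, depends_on))  # prints []
```

An empty list means every dependent job started only after the jobs it depends on had finished. Any pair that is returned corresponds to overlapping bars in the chart that should not overlap.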

Test Results

This option shows the results of periodic regression tests that have been run manually or on a schedule. Each row represents the results of one test. Double-click a row to see its pytest output, which provides details about the run, such as why it failed, and can help you debug your program.
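For readers unfamiliar with pytest, here is a minimal sketch of the kind of test whose output would appear here. The function under test (`clean_symbol`) and the file name are hypothetical. The only pytest convention relied on is that functions named `test_*` are collected and that a failing `assert` is reported in the output.

```python
# test_clean_symbol.py (hypothetical file name)


def clean_symbol(raw: str) -> str:
    """Hypothetical function under test: normalise a symbol string."""
    return raw.strip().upper()


def test_clean_symbol_strips_whitespace():
    # Surrounding whitespace is removed and the result is upper-cased.
    assert clean_symbol("  abc ") == "ABC"


def test_clean_symbol_is_idempotent():
    # Cleaning an already clean symbol must not change it.
    once = clean_symbol(" abc")
    assert clean_symbol(once) == once
```

When an assertion fails, pytest prints the values on both sides of the comparison, which is the detail that helps explain why a test failed.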

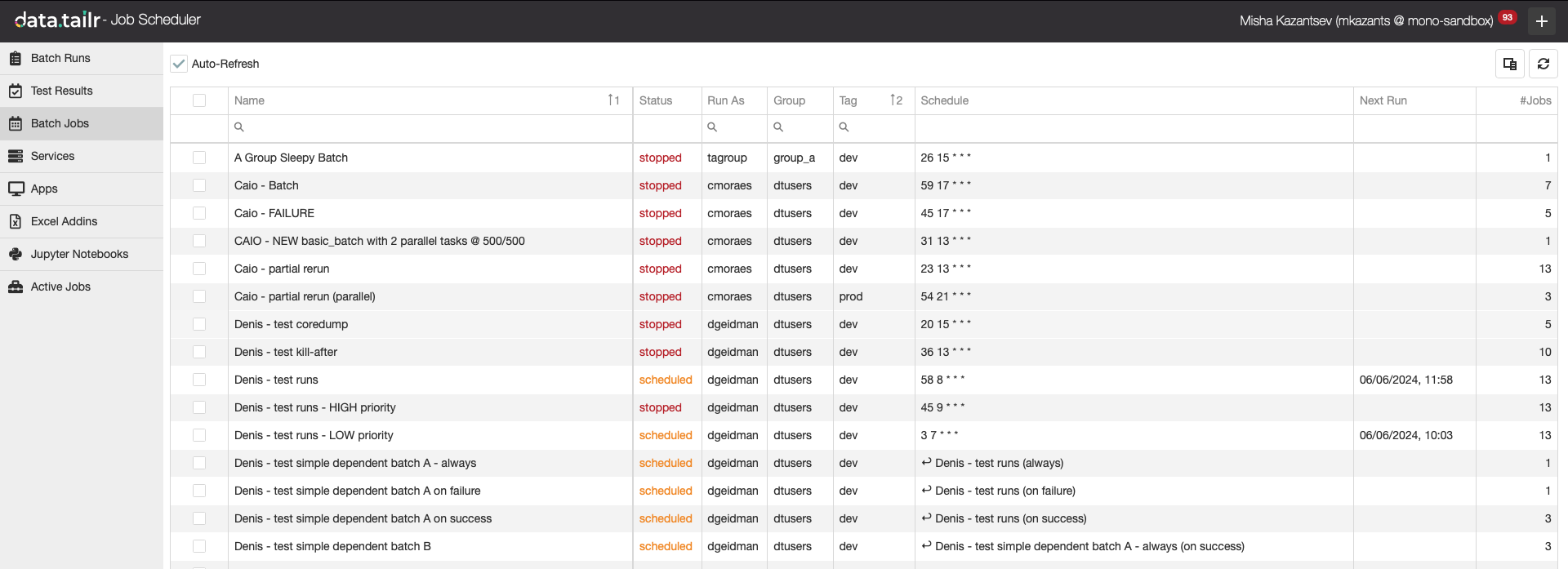

Batch Jobs

This option enables you to create or manage batch jobs to be scheduled by Datatailr to run automatically.

Note – Instead of using the following Datatailr user interface features to schedule a Batch Job, you can schedule a Batch Job programmatically, as described in Scheduling a Batch Job Programmatically.

The following columns of information are included for each Batch Job –

-

Name – Specifies the free text name assigned to this Batch Job.

-

Status – Specifies whether the batch is scheduled to be run, or is in stopped state.

-

Run as – Specifies the user under which the jobs will be executed in the Batch Run. This determines the permissions awarded to the jobs.

-

Owner – Specifies the owner of the Batch Job.

-

Group – Specifies the user group of the Batch Job.

-

Permissions – Defines the access (Read and Write) permissions of the users who can access this runnable. You may refer to Permissions in Datatailr for more information.

-

Tag – Specifies the Datatailr environment in which this batch job is defined to run – Dev, Pre or Prod.

-

Schedule – Specifies the schedule according to which this Batch Job is defined to run in Cron format.

-

Next Run – Specifies when the next scheduled run of this Batch Job is going to happen.

-

#Jobs – Specifies the quantity of jobs in the Batch Job.
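As a reminder of how a Cron expression in the Schedule column is read, the sketch below splits an expression into named fields. It assumes the common five-field Cron layout (minute, hour, day of month, month, day of week). Whether Datatailr uses exactly this dialect is an assumption, and `describe_cron` is a hypothetical helper, not part of Datatailr.

```python
# Field order of a standard five-field Cron expression (assumed dialect).
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expression: str) -> dict:
    """Map each field of a five-field Cron expression to its name."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(
            f"expected {len(CRON_FIELDS)} fields, got {len(parts)}: {expression!r}"
        )
    return dict(zip(CRON_FIELDS, parts))


# "30 6 * * 1-5" means 06:30 on Monday to Friday in standard Cron.
print(describe_cron("30 6 * * 1-5"))
```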

Editing a Batch Job

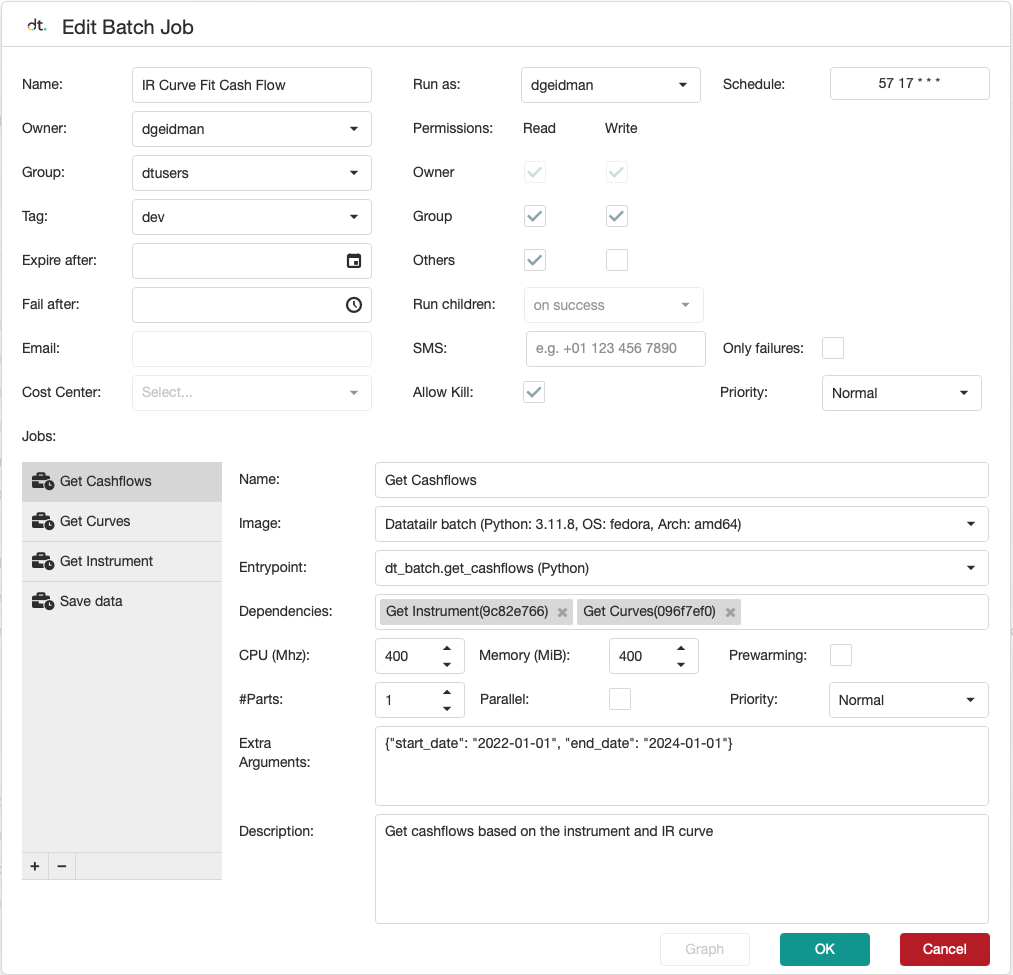

Double-click on a Batch Job’s row to display its details, which also enables you to edit them, as shown below –

-

Name – Specifies a free text name for this batch job.

-

Owner/Group – Specifies the owner and the user group of this batch job.

-

Tag – Specifies the Datatailr environment in which this batch job is defined to run – Dev, Pre or Prod.

-

Expire After – Specifies that upon reaching this expiration date, this batch job automatically transitions from scheduled to stopped to prevent future runs.

-

Fail after – The Scheduler will terminate a job if its running time exceeds the value specified here.

-

Email and SMS – Specifies the recipient(s) of notifications upon completion of this batch job. Marking the Only failures checkbox specifies that both types of notifications are only sent upon the failure of a Batch Job. The Telephone Number field accepts any fully qualified internationally recognized format that includes the country code, not just US format.

-

Cost Center – Select the cost center to which the cost of running this job is allocated. You can select any of the cost centers that were defined in the Cost Manager and to which the owner(s) are allowed access.

-

Run as – IMPORTANT! Specifies the user under which the processes in the batch are executed. This determines the permissions awarded to the batch, such as retrieving encryption keys from a database.

-

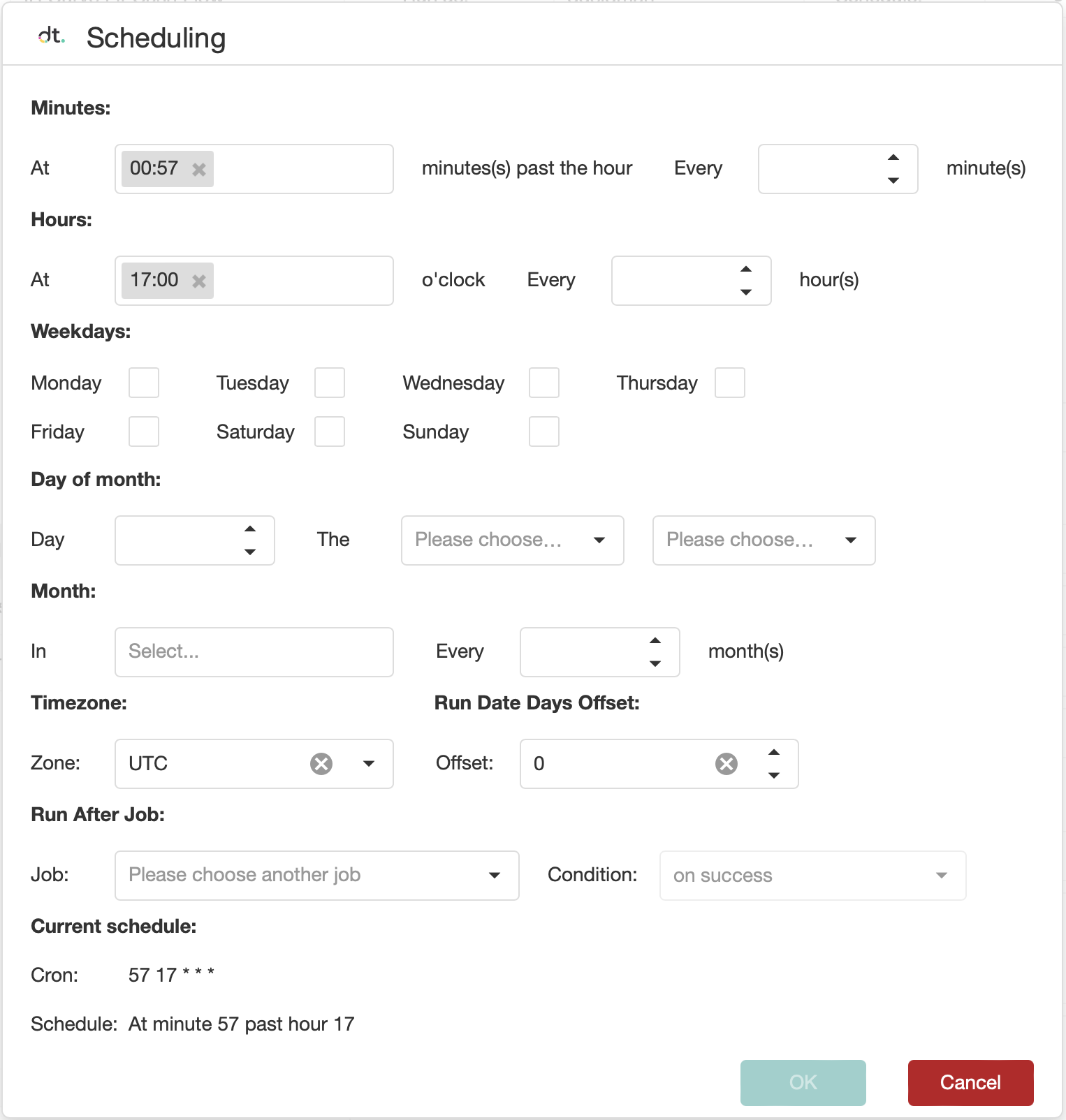

Schedule – Displays the following window in which you can specify the schedule on which this batch job is run –

Tip – The schedule that you define is represented at the bottom of this window as simple text (in the Schedule field shown above) and in Cron format.

- The Run After Job section enables you to specify that the running of this batch job is dependent on the completion of another Batch Job, specified in the Job field. Condition can be set to "on success", "on failure" or "always".

- The Run Date Days Offset field enables you to schedule the run of a batch as if it were a date in the past. For example, to run a report as if it were yesterday, showing the data of yesterday, enter -1 in the Offset field and likewise, to run a report as if it were a week ago, enter -7 in the Offset field.
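The arithmetic behind the Run Date Days Offset field can be sketched as follows. This assumes the offset is counted in calendar days. `effective_run_date` is a hypothetical helper used only for illustration.

```python
from datetime import date, timedelta


def effective_run_date(actual_date: date, offset_days: int) -> date:
    """Return the date the batch behaves as if it were running on."""
    return actual_date + timedelta(days=offset_days)


today = date(2024, 3, 15)
print(effective_run_date(today, -1))  # 2024-03-14 (as if it were yesterday)
print(effective_run_date(today, -7))  # 2024-03-08 (as if it were a week ago)
```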

-

Allow Kill – Enables Datatailr to perform automatic cost optimization by allowing it to terminate a container on a Virtual Machine (VM) and run it on a less expensive resource (box) when it’s more cost-effective to do so. See Allow Kill for more information.

-

Jobs – The bottom left area lists the jobs that comprise the batch.

Selecting one of these jobs displays the job’s details on the right. For example, the following shows that a Get Cashflows job is selected.

-

Image – Specifies a Datatailr container image to be used to run the job.

-

Entrypoint – Specifies the function in the Python package that serves as the starting point for executing its code.

-

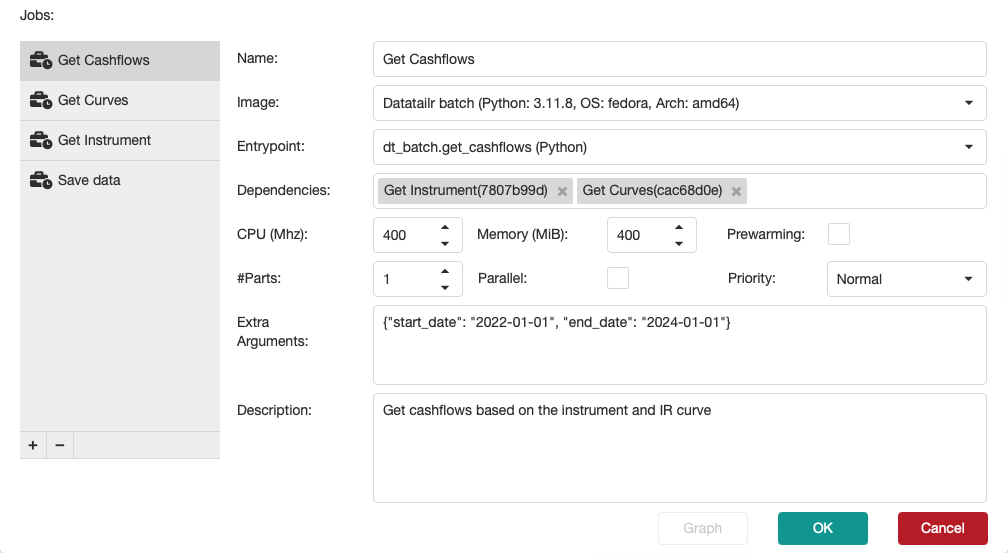

Dependencies – The simplest kind of batch runs a single job and has no dependencies. In this case the Dependencies field is empty. When a batch contains multiple jobs, then some jobs in this list may be dependent on the completion of one or more other jobs in this list, which is reflected in the Dependencies field. Jobs that are relied upon by other jobs run first. Jobs with dependencies only commence after the completion of the jobs they depend on. All remaining jobs run simultaneously.

Note – The top-down order in which these jobs are listed does not represent their dependencies.

For example, the above screenshot shows that the Get Cashflows job is dependent on the Get Instrument and Get Curves jobs. This means that both Get Instrument and Get Curves must be completed before the Get Cashflows job can start, because the Get Cashflows job receives an instrument and curves as input arguments from Get Instrument and Get Curves jobs.
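The ordering implied by the Get Cashflows example can be sketched with the Python standard library module `graphlib` (Python 3.9 or later). This only illustrates dependency ordering in general. It is not how the Datatailr scheduler is implemented, and the `dependencies` dictionary and `execution_waves` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Job -> set of jobs it depends on, taken from the example above.
dependencies = {
    "Get Instrument": set(),
    "Get Curves": set(),
    "Get Cashflows": {"Get Instrument", "Get Curves"},
}


def execution_waves(dependencies):
    """Group jobs into waves; jobs within one wave can run simultaneously."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(execution_waves(dependencies))
# [['Get Curves', 'Get Instrument'], ['Get Cashflows']]
```

Grouping into waves is a simplification. A real scheduler may start a job as soon as its own dependencies complete, without waiting for the rest of the wave.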

For more information about writing batch jobs and implementing this hierarchy in Datatailr, see How to Create Programs – Batch Jobs.

-

#Parts – The value of the #Parts field specifies how many instances of this job will run simultaneously.

-

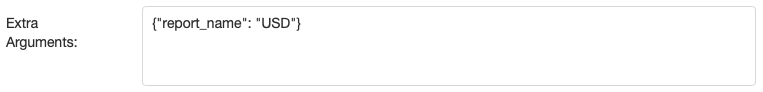

Extra Arguments – This field enables you to parametrize the function in the Python package specified in the Entrypoint field.

The function must be written so that it retrieves and uses this value. If the function is expecting an argument with a value, and it is not provided here, then the function will fail. For example, if a function is expecting a key named report, but report_name is passed instead, then the function will fail.

The arguments specified here must be written as valid JSON, which is passed to the function as a dictionary. The values specified here will be available in that dictionary, but nothing else.

Note – If the entry here is not valid JSON, then an error indication is displayed, for example, as shown below. This value must be fixed or deleted before you can click the OK button to save these arguments.
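The behaviour of the Extra Arguments field described above can be sketched as follows. The entrypoint name `run_report`, its single-dictionary signature and the value `daily_summary` are assumptions made for illustration. The exact signature that Datatailr expects is described in the documentation on writing batch jobs.

```python
import json


def run_report(arguments: dict) -> str:
    """Hypothetical entrypoint that receives the Extra Arguments as a dict."""
    # Raises KeyError if the expected key is missing, for example when the
    # JSON supplies "report_name" instead of "report".
    report = arguments["report"]
    return f"Generating report: {report}"


extra_arguments = '{"report": "daily_summary"}'  # text entered in the field
arguments = json.loads(extra_arguments)  # raises json.JSONDecodeError if invalid
print(run_report(arguments))  # Generating report: daily_summary
```

Reading optional keys with `arguments.get("key", default)` is one way to keep a function from failing when an argument is omitted.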

-

CPU and Memory – Specifies the resources in MHz and MB that will be assigned to the job.

Tip – Datatailr can automatically multitask multiple instances of a job in order to achieve its results more quickly. The value of the #Parts field specifies how many instances of this job will run simultaneously. For example, if a Python Packages job retrieves the repository of third-party Python packages, which contains approximately 380,000 or more packages, then running 20 instances of this job simultaneously will achieve the result in a fraction of the time. However, we recommend not setting #Parts too high in order to avoid a website mistaking Datatailr for a DDOS (Distributed Denial of Service) attack and blocking it.
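One common way to divide work between simultaneous instances is sketched below. How a running instance learns its own part number in Datatailr is not covered here, so `part_index`, `num_parts` and `items_for_part` are hypothetical names used only to illustrate the idea.

```python
def items_for_part(items, part_index, num_parts):
    """Return the slice of items handled by one of num_parts instances."""
    # Striding by num_parts gives each instance a disjoint, near-equal share.
    return items[part_index::num_parts]


packages = [f"package-{i}" for i in range(10)]
for part_index in range(3):
    print(part_index, items_for_part(packages, part_index, 3))
```

Every item is handled by exactly one instance, and the shares differ in size by at most one item.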

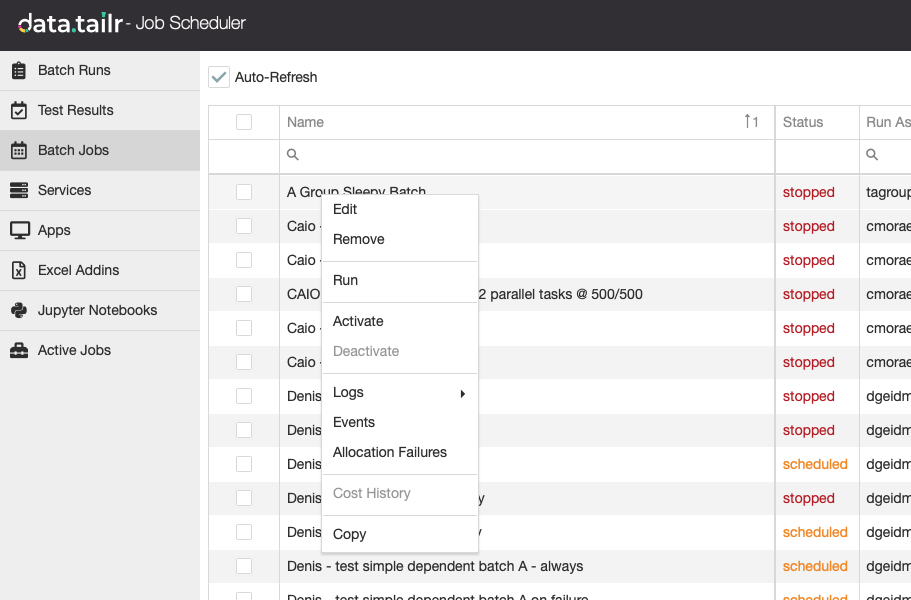

Batch Jobs Right-click Menu

Right-clicking on a batch’s row displays various menu options, as shown below –

This menu includes the following options –

-

Edit – See Editing a Batch Job in Batch Jobs.

-

Remove – Removes the job from the list. If the job is running, then it terminates the current run and deletes traces of it. Information about all previous runs is retained.

-

Run – Triggers a run of the Batch Job immediately. It may take several minutes for the Batch Job to start running if new instances have to be brought up.

-

Activate and Deactivate – Transitions the Batch Job into "scheduled" or "stopped" state respectively.

-

Logs – See Logs in Batch Runs.

-

Events – See Events in Batch Runs.

-

Allocation failures – Enables you to display information about the batch job’s failure to run because of lack of resources.

-

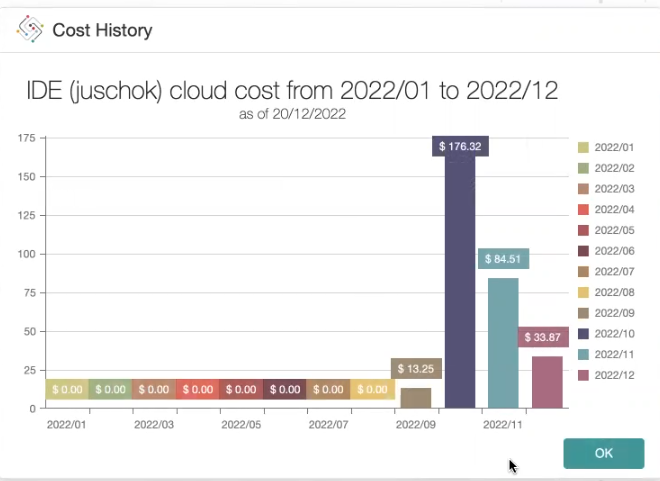

Cost History – Displays the cost of running this job over time.

Note – If a job is the only one running on a machine, then the cost of running that entire box is allocated to that job.

-

Copy – Copies the UUID of the Batch Job to the clipboard.

Services

This option enables you to start and manage services.

Double-click on a service’s row to display or edit its details. The features here are similar to those described in Batch Jobs. The differences are described below –

- Port – Specifies the port the service exposes for communicating with it (if it has one). Datatailr makes no assumptions about the communication protocol, so you can communicate through this port in whichever protocol you have implemented, such as REST, gRPC and so on.

Apps

This option enables you to start and manage apps.

Double-click on an app’s row to display or edit its details. Most of the options here were described in the Deploying the Runnable step of Hello World from Scratch. Because that section described the simplest scenario for getting started, it specified that you leave the default values in these fields. The following describes these fields in detail –

-

Health – Specify a URL that Datatailr can ping (and get any response) in order to verify the health of this runnable. If the app does not respond to the ping at this URL, then Datatailr assumes that it is no longer functional and recycles it, meaning terminates it and spawns a process to replace it.

-

#Containers – Specifies the number of containers of this service that run in parallel for load-balancing or redundancy purposes. This value must be 1 or higher.

-

#Proc/Cont – Specifies the number of processes that are running inside each container (described above). This value must be 1 or higher.
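To illustrate the Health field, the sketch below exposes a URL that answers any GET request to `/health` with a 200 response, using only the Python standard library. The `/health` path, the port and the `make_server` helper are assumptions made for illustration. Your app framework will usually provide its own way to add such a route.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /health with 200 so a health check sees a response."""

    def do_GET(self):
        if self.path == "/health":
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the example quiet; remove to log each request


def make_server(host: str = "127.0.0.1", port: int = 8080) -> HTTPServer:
    """Create the server; call serve_forever() on the result to start it."""
    return HTTPServer((host, port), HealthHandler)


# To run it (this call blocks): make_server().serve_forever()
```

If the process hangs or exits, requests to this URL stop being answered, which is the condition the Health setting is designed to detect.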

Excel Addins

This option enables you to start and manage Excel Addins.

Double-click on an Excel Addin’s row to display or edit its details.

In Datatailr, the schedule of Excel Addin jobs is managed in a similar manner to apps, as described in Job Scheduler – Apps. One difference is that for Excel Addins you do not specify the number of Containers or #Proc/Cont. This is because a personal Excel Addin instance is run for each user (one-to-one mapping), since Excel Addins must typically be state-persistent.

Jupyter Notebooks

This option enables you to start and manage Jupyter Notebooks.

Double-click on a Jupyter Notebook’s row to display or edit its details.

In Datatailr, the schedule of Jupyter Notebook jobs is managed in a similar manner to apps, as described in Job Scheduler – Apps. One difference is that for Jupyter Notebook jobs you do not specify the number of Containers or #Proc/Cont. This is because a personal Jupyter Notebook instance is run for each user (one-to-one mapping), since Jupyter Notebook jobs must typically be state-persistent.

Running a Jupyter Notebook as an App

Tip – Here’s the simplest and easiest way to create an app!

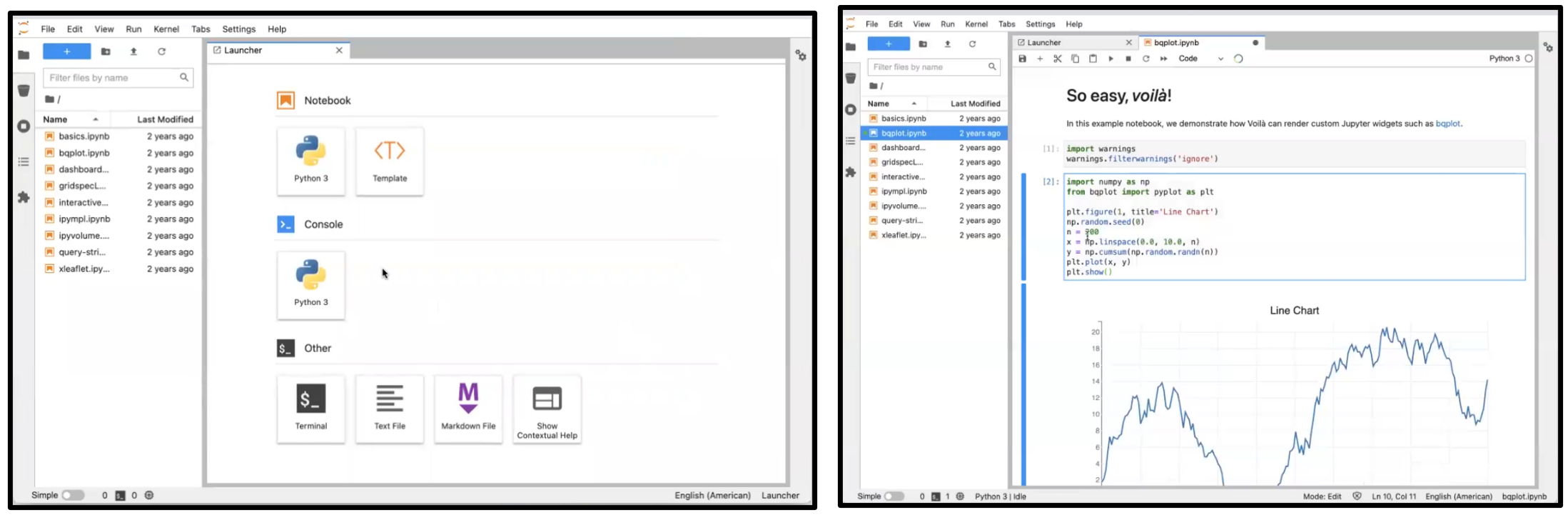

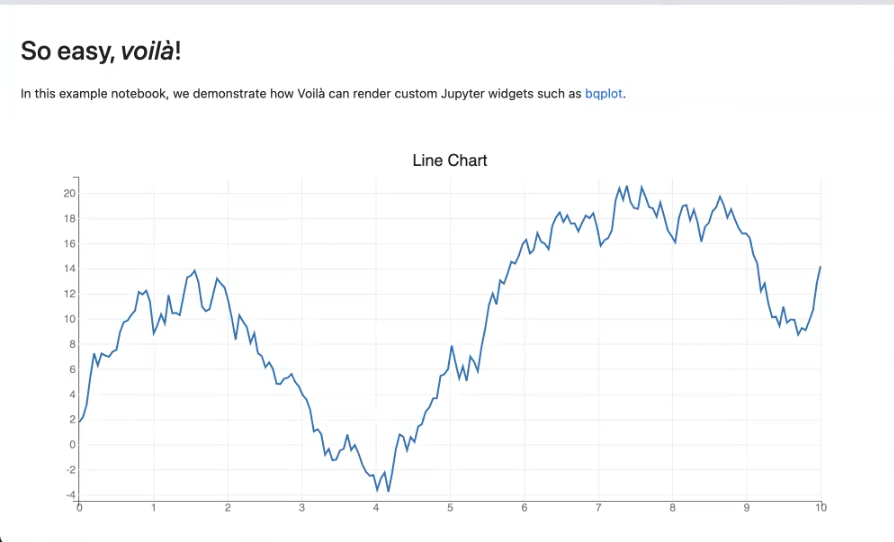

Running a Datatailr Jupyter Notebook can launch a standard Jupyter Notebook user interface. For example, as shown below –

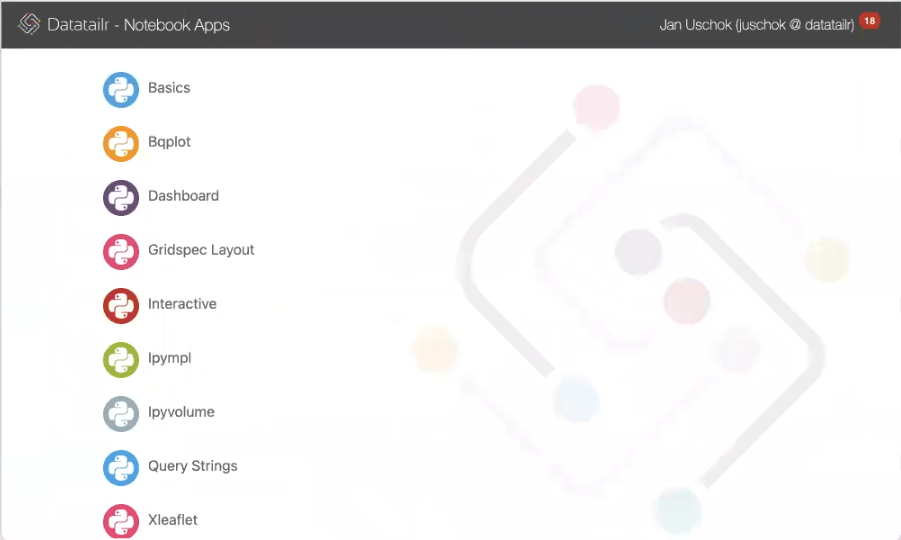

In addition, Datatailr enables you to select the As App option in order to run the Datatailr Jupyter Notebook like any other Datatailr app, which appears in the user’s main window with the very same set of notebooks.

For example, as shown below –

Clicking on any of these notebooks launches it in Datatailr using Voilà™. For example, as shown below –

Note – Voilà is an open-source tool that turns Jupyter Notebooks into standalone web apps that display interactive data visualizations.

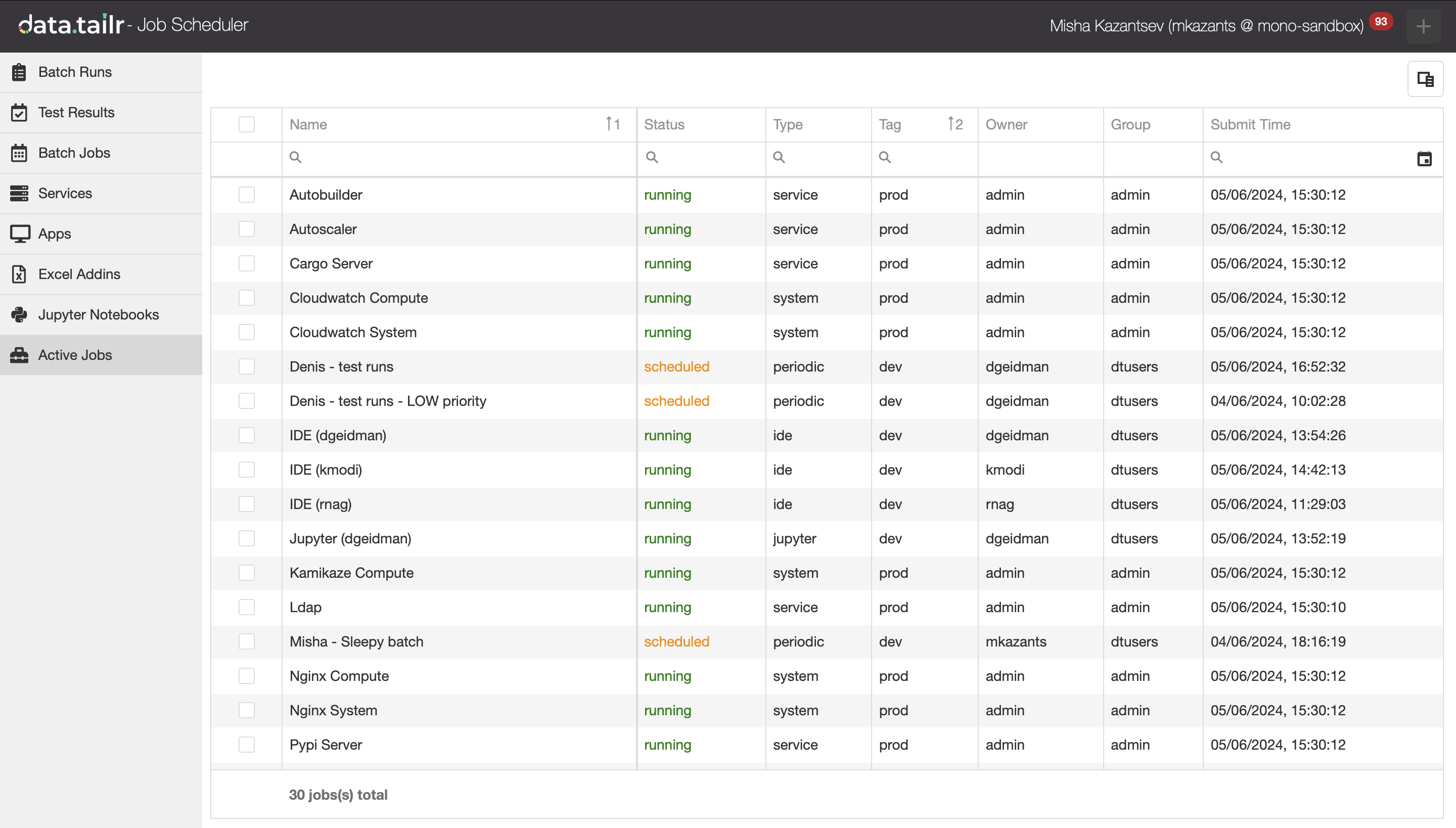

Active Jobs

This option enables you to view all the processes that are currently running in Datatailr, similar to a Task Manager in any OS.

Each row represents an active running process. The following columns of information are provided –

-

Status column indicates whether the process is Running, Dead or Scheduled to be run in the future.

-

Type column indicates which type of process is running – ide, jupyter, batch, periodic (schedule of a batch job), service, excel, app or system.

-

Tag shows the Datatailr environment in which the job is running.

-

Owner and Group indicate the owner and user group of the running job, which determine its permissions.

-

Submit Time is the time when the job was submitted for execution.

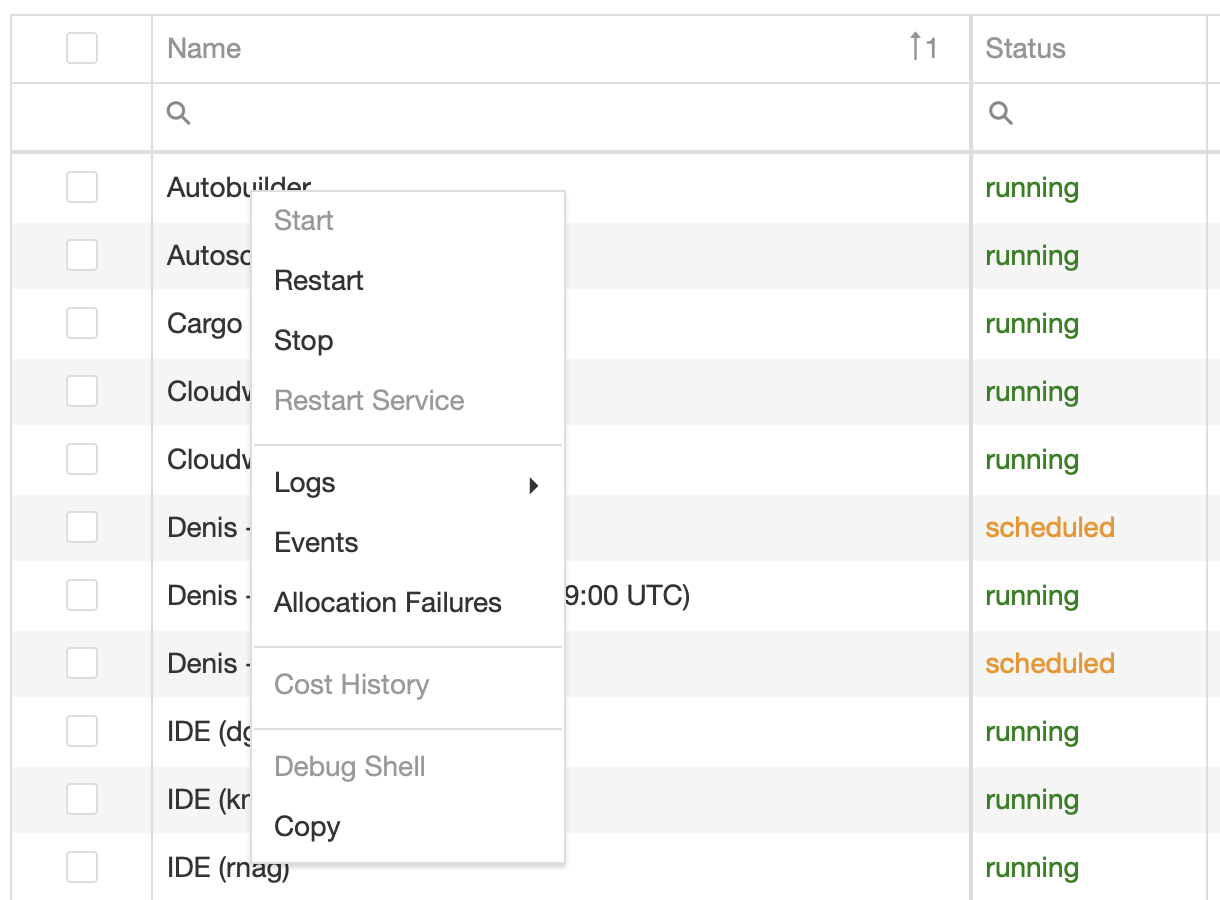

Right-clicking on a row shows the following menu. Most of its options were described in Batch Jobs Right-click Menu and Batch Runs Right-click Menu –

However, the Active Jobs tab also allows you to Start, Stop and Restart jobs based on your permissions. This can be helpful to restart an App or stop the execution of some Batch Jobs that you don't want to be running anymore.

Note – You can bulk-stop multiple jobs at once if you select them using the column with checkboxes prior to clicking "Stop" in the right-click menu.

Restarting a Service

IMPORTANT! We do not recommend using the Restart and Stop menu options on any of the Datatailr jobs, excluding Nginx (compute) and Nginx (system), as described below. If you’re experiencing an issue for which you think you need to use these, then contact Datatailr support. The Restart Service option is intended for Datatailr admins. It enables them to restart the Datatailr service. Datatailr services run on servers named Nginx.

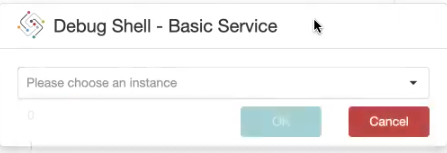

Debug Shell

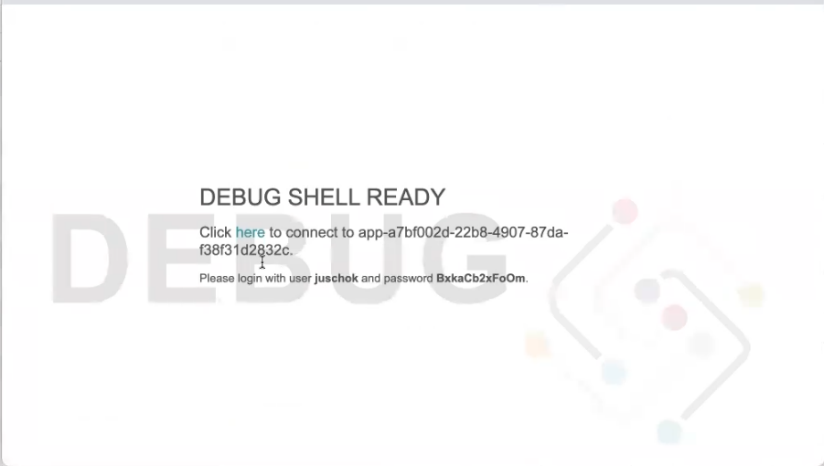

Datatailr enables you to debug a production process by providing a debug shell (terminal) for a service that you own, but not for any Datatailr services. The following is displayed –

Select an instance of the service from the dropdown menu. The following is displayed –

You will need to log in with your username and a one-time password that is displayed in this window.

IMPORTANT! Make sure to copy this password.

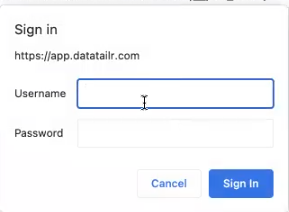

Click the here link in the window shown above. The following is displayed –

Sign in using your username and password that you copied, as described above.

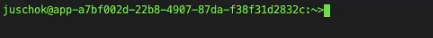

A shell is displayed. For example –

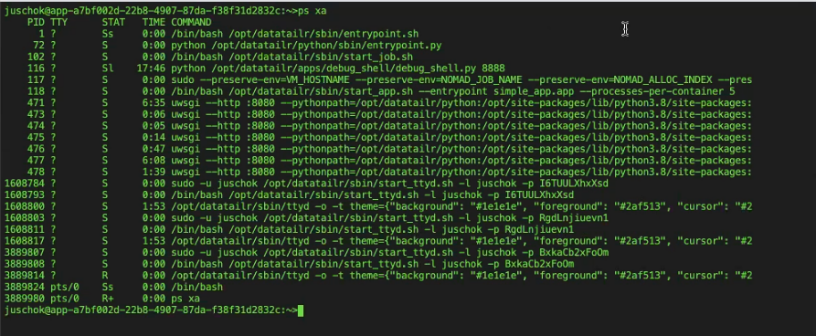

For example, type ps xa to display the following, which may help you debug the app, look at log files, and inspect data that running programs produce.
